Rachel Lau and J.J. Tolentino work with leading public interest foundations and nonprofits on technology policy issues at Freedman Consulting, LLC. Rohan Tapiawala, a Freedman Consulting Phillip Bevington policy & research intern, also contributed to this article.

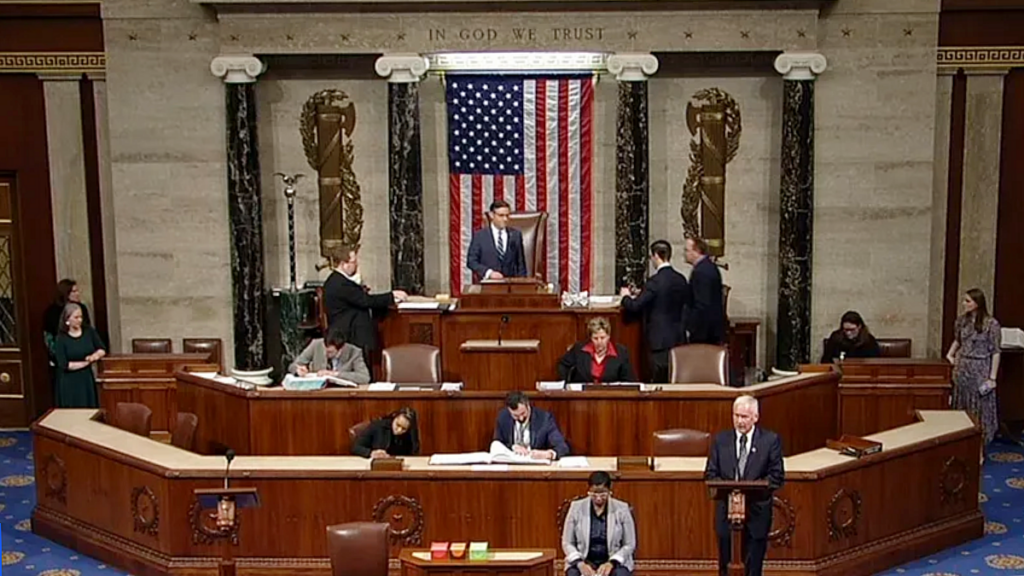

US House of Representatives voting for the “Protecting Americans from Foreign Adversary Controlled Applications Act” on March 13, 2024.

Just as much of the country is experiencing the indecision between the cold of winter and the warmth of spring, movement on tech topics came in fits and starts during the past month in both the US legislative and executive branches. In Congress, bills on TikTok and data privacy passed the House but face uncertain prospects in the Senate. In the executive branch, President Biden’s State of the Union address mentioned key tech policy priorities, and the Office of Management and Budget (OMB) issued its guidance on AI in the last days of March.

In the judiciary, the Supreme Court held oral arguments for Murthy v. Missouri this month, a case related to White House efforts urging social media companies to take down alleged disinformation about elections and COVID. A majority of the court indicated skepticism about the claim that Biden officials violated the First Amendment by asking social media platforms to suppress or delete posts. A wide range of stakeholders submitted amicus briefs ahead of the case. The Supreme Court’s decision in Murthy v. Missouri could have a major impact on the future of online content moderation.

Read on to learn more about March developments with TikTok bans and data privacy, the OMB AI guidance memo, tech mentions in the State of the Union, and more.

House Passes Two Tech Bills to Protect Americans from “Foreign Adversaries”

Summary: This month, the House of Representatives passed two landmark tech bills to safeguard Americans’ data from being accessed by foreign governments. On March 13, the House passed the Protecting Americans from Foreign Adversary Controlled Applications Act (H.R. 7521) in a 352-65 vote. The bill would force China-based ByteDance to either sell its popular social media platform, TikTok, or see the platform banned in the US. It aims to address long-held national security concerns about ByteDance’s connection to the Chinese government and would give ByteDance six months to divest its ownership of TikTok. Despite overwhelming support in the House and assurance from President Biden that he would sign the bill, H.R. 7521 currently has no timeline for Senate action and would likely face extensive legal challenges if enacted. Senate Commerce Committee Chairwoman Maria Cantwell (D-WA) has called for additional hearings and potential revisions to the bill, while Senate Majority Leader Chuck Schumer (D-NY) has not indicated whether he might bring the bill to the Senate floor.

A week after passing H.R. 7521, the House unanimously passed the first major data privacy bill in years. The Protecting Americans’ Data from Foreign Adversaries Act (H.R. 7520) would prohibit data brokers from selling Americans’ sensitive data to China, Russia, North Korea, Iran, and other countries deemed “foreign adversaries.” In a joint statement, House Energy and Commerce Committee Chair Cathy McMorris Rodgers (R-WA) and Rep. Frank Pallone (D-NJ) emphasized that H.R. 7520 builds on H.R. 7521 and serves as “an important complement to more comprehensive national data privacy.” The bill’s fate in the Senate remains uncertain, and there is no current timeline for Senate action. However, Sen. Maria Cantwell (D-WA), whose committee would have jurisdiction over the data broker bill, has shown her support.

Stakeholder Response: In the hours following the House’s vote on the TikTok bill, Rep. Jeff Jackson (D-NC), who has amassed over 2.3 million followers on TikTok, took to the platform to discuss his support for the bill, arguing that the best-case scenario is for TikTok to no longer be owned and potentially controlled by an “adversarial government.” Rep. Bill Pascrell (D-NJ) stated that the US has a “right to regulate a social media company controlled by the hostile Chinese regime that exercises broad power over American discourse and popular culture.” Similarly, the American Economic Liberties Project released a statement supporting the Foreign Adversary Controlled Applications Act, citing national security concerns regarding Chinese ownership of TikTok and its ability to manipulate public opinion.

Opponents of the TikTok bill have cited First Amendment concerns, as well as fears that the bill would negatively impact young voters who rely on the platform for news and hurt small businesses that depend on TikTok for marketing and sales. A group of public interest organizations including the American Civil Liberties Union, Center for Democracy & Technology, and the Electronic Frontier Foundation, among others, sent a letter to House Speaker Rep. Mike Johnson (R-LA) and House Minority Leader Rep. Hakeem Jeffries (D-NY) urging them to oppose the legislation. The groups argued that the bill “would trample” the First Amendment rights of millions of people in the US and would set an alarming global precedent for “excessive government control over social media platforms.”

Regarding H.R. 7520, privacy groups have welcomed the effort but suggested that it is not enough to rein in the data broker industry. Eric Null, co-director of the Privacy & Data Project at the Center for Democracy & Technology, suggested that a comprehensive data privacy bill could better protect “Americans’ data against a variety of harms by reducing the overall amount of data in the online ecosystem, instead of placing limits on one particular type of data sale between specific entities.”

What We’re Reading: Gabby Miller covered the House’s passage of the TikTok bill at Tech Policy Press. The Washington Post warned that the sale of TikTok would likely cost over $100 billion, a price tag that only a few potential buyers could meet, which may lead to further antitrust scrutiny if TikTok were bought by a rival company. The Atlantic provided a deep dive into the underlying factors that led to the potential TikTok ban and its implications for US-China relations.

OMB Finalizes Guidance Memo on Federal AI Use

Summary: On March 28, OMB released its final guidance on federal agencies’ use of AI systems, in accordance with the 150-day deadline set by President Biden’s AI Executive Order. Among other provisions, the memo mandates that agencies establish safeguards for AI uses that could impact Americans’ rights or safety, requires agencies to publicly disclose details about the AI systems they use and the associated risks, and requires every federal agency to designate a chief AI officer to oversee AI implementation. OMB also announced that the National AI Talent Surge will hire at least 100 AI professionals to work within the federal government by this summer. Missing from OMB’s memo was concrete guidance on federal procurement of AI systems, which OMB has promised to address “later this year.” To support this effort, OMB issued an RFI on federal procurement of AI on March 29.

Stakeholder Response: Civil society actors generally praised the OMB guidance and expressed optimism about future implementation of the AI executive order. Alex Givens, president and CEO of the Center for Democracy & Technology, told Politico Morning Tech that federal procurement is an ideal place for the government to influence and normalize “responsible standards for AI governance.” Maya Wiley, president and CEO of The Leadership Conference on Civil and Human Rights, praised the OMB guidance as “one step further down the path of facing a technology rich future that begins to address its harms,” emphasizing that tech policy must consider its impacts on real people. The Lawyers’ Committee for Civil Rights Under Law also celebrated the OMB guidance and called on Congress to regulate AI through legislation. Finally, Upturn commented that although the OMB guidance “contains some important measures” on AI testing and civil rights protections, “these measures are at risk of being undercut by potential loopholes.” In Tech Policy Press, Janet Haven, executive director of Data & Society and co-chair of the Rights, Trust and Safety Working Group of the National AI Advisory Committee, wrote that while not perfect, the new OMB memo should leave anyone concerned about the impacts of AI on people, communities, and society feeling cautiously optimistic.

What We’re Reading: The Verge discussed the responsibilities of chief AI officers in implementing OMB’s guidance and monitoring agency use of AI systems. CNN reported on the OMB memo, placing it in the context of AI executive order implementation so far. Bloomberg outlined the different components of the OMB guidance.

Tech Mentions in the State of the Union

Summary: In March, President Biden’s State of the Union address covered a wide range of issues, from immigration to IVF treatment. It also “marked the first time a president has uttered ‘AI’ during a State of the Union address, according to an analysis of transcripts from the American Presidency Project.” On this prominent stage, Biden called on Congress to take action on a series of tech policy issues, including passing bipartisan children’s online privacy legislation, harnessing the potential and minimizing the risks of AI, and outlawing AI voice impersonation.

Stakeholder Response: Biden’s brief tech policy mentions in the State of the Union sparked mostly supportive responses from stakeholders. New America’s Open Technology Institute indicated its support for strong privacy laws and algorithmic accountability mechanisms, naming the 2022 American Data Privacy and Protection Act (H.R. 8152) and the Algorithmic Accountability Act (S. 2892 / H.R. 5628) as potential vehicles for online safety. Fei-Fei Li, co-director of the Human-Centered AI Institute at Stanford University, praised Biden’s mention of AI harms and the “double-edged sword nature of a powerful technology.” Finally, the International Association of Privacy Professionals, while supporting Biden’s call for children’s online safety, online privacy, and AI safety, emphasized the deadlock and lack of legislative momentum on tech policy issues generally.

What We’re Reading: CNN tracked the topics covered by Biden in the State of the Union. The Wall Street Journal published a live analysis of the State of the Union. The New York Times covered Trump’s response to Biden’s speech.

Tech TidBits & Bytes

Tech TidBits & Bytes aims to provide short updates on tech policy happenings across the executive branch and agencies, civil society, courts, and industry.

In the executive branch and agencies:

The White House released the president’s budget, which included a section on “tech accountability and responsible innovation in AI.” President Biden’s budget focuses on the “safe, secure, and trustworthy development and use of AI,” the new AI Safety Institute, a National AI Research Resource pilot, and AI integration and investments in AI and tech talent within the federal government.

Recent appropriations included budget cuts for NIST and the National Science Foundation, sparking concern among lawmakers and experts. Even with President Biden’s proposed funding increases for these agencies in fiscal year 2025, the allocations fall short of the levels authorized in the CHIPS and Science Act, leaving a significant gap relative to the law’s funding targets.

The National Telecommunications and Information Administration (NTIA) released a report on AI accountability that made eight sets of policy recommendations to improve AI transparency, set guidance on independent evaluations and auditing of AI systems, and establish consequences for entities that fail to mitigate risks and deliver on their commitments to develop safe and responsible AI.

The Department of Homeland Security (DHS) became the first federal agency to publish a plan for integrating AI, aiming to leverage the technology for crime prevention, disaster management, and immigration training. Through partnerships with tech firms like OpenAI, Anthropic, and Meta, DHS plans to implement AI tools using $5 million in pilot programs.

Gladstone AI released a report commissioned by the US State Department assessing the proliferation and national security risks from “weaponized and misaligned AI.” The report included an analysis of “catastrophic AI risks” and recommended a set of policy actions to disrupt the AI industry and ensure advanced AI systems are safe and secure.

In civil society:

Almost 50 civil society organizations, including Mozilla and the Center for Democracy & Technology, published a letter to US Secretary of Commerce Gina Raimondo highlighting the need to protect openness and transparency in AI models.

More than 350 leading stakeholders in the AI, legal, and policy communities published a letter at MIT proposing that AI companies make policy changes to “protect good faith research on their models, and promote safety, security, and trustworthiness of AI systems.” The letter urged AI companies to support independent evaluation and “provide basic protections and more equitable access for good faith AI safety and trustworthiness research.”

A coalition of civil society organizations including Data & Society, the Center for Democracy & Technology, the ACLU, and the AFL-CIO Technology Institute published a letter to Secretary Raimondo calling on NIST and the AI Safety Institute to address the current harms of AI by focusing on algorithmic discrimination and using evidence-based methodologies for harm reduction.

The Center for Democracy & Technology (CDT) published an article in support of ensuring that NIST’s AI Safety Institute Consortium is a meaningful hub for multi-stakeholder engagement to address evolving risks posed by the development of AI systems. CDT also shared multiple suggestions for NIST to consider to ensure the consortium is as inclusive and effective as possible in mitigating the risks and harms of AI.

The Stanford Institute for Human-Centered Artificial Intelligence, in collaboration with Black in AI, published a white paper titled “Exploring the Impact of AI on Black Americans: Considerations for the Congressional Black Caucus’s Policy Initiatives,” which examines the potential impacts of AI on racial inequalities as well as where AI can benefit Black communities.

Lawrence Norden and David Evan Harris published an article with the Brennan Center for Justice critiquing last month’s industry accords to combat the deceptive use of AI in the upcoming 2024 elections and suggesting reporting requirements to ensure companies adhere to their commitments.

The Integrity Institute released a report titled “On Risk Assessment and Mitigation for Algorithmic Systems,” which provides policymakers with a framework for effective risk assessments to minimize the risks and harms that stem from online platforms.

Jenny Toomey, director of the Ford Foundation’s Catalyst Fund, published an article in Fast Company comparing today’s rapid AI transformation to the digital music transformation of the early 2000s.

The Kapor Foundation, in partnership with the Hispanic Heritage Foundation, Somos VC, and the Congressional Hispanic Caucus Institute, published “The State of Tech Diversity: The Latine Tech Ecosystem,” a report that outlines the ways in which Latino communities have been excluded from the tech industry and presents solutions for bringing more tech opportunities to Latino populations.

In courts:

A federal judge in California dismissed X’s lawsuit against the Center for Countering Digital Hate (CCDH). The court ruled that CCDH’s findings, which claimed that there was a rise of hate speech on X following Elon Musk’s takeover, were protected under law and did not unlawfully impact X’s business dealings.

In industry:

Meta announced the discontinuation of CrowdTangle, a data tool widely used by researchers and journalists to monitor how content spreads on Facebook and Instagram. CrowdTangle will be replaced by the Meta Content Library, which will be available only to academics and nonprofits, cutting off access for news organizations. The shift has sparked concerns among researchers about new limits on studying the effects of social media, especially in the lead-up to the 2024 elections. A coalition of over 150 organizations published an open letter calling on Meta to keep CrowdTangle online until January 2025, help transition researchers to Meta’s new content library, and make the content library tool more effective for election monitoring.

Microsoft software engineer Shane Jones sent a letter to FTC Chair Lina Khan and Microsoft’s board of directors saying that the company’s AI image generator created violent and sexual images as well as copyrighted material. Microsoft responded to Jones’ claims by reaffirming that it has “in-product user feedback tools and robust internal reporting channels” in place to validate and test Jones’ findings.

Over 400 stakeholders, including OpenAI, Meta, Google, Microsoft, and Salesforce, signed an open letter committing to develop AI for a better future. The letter is another effort by the tech industry to highlight a collective pledge to develop AI responsibly in order to “maximize AI’s benefits and mitigate its risks.”

Over 90 biologists specializing in AI-driven protein design signed an agreement to ensure that the benefits of their research outweigh its potential harms, especially regarding potential dual uses for bioweapon creation. The agreement focuses on promoting beneficial AI research while advocating for security and safety measures to mitigate potential risks.

During its 2024 policy summit, the tech trade association INCOMPAS, whose members include Meta and Microsoft, launched the INCOMPAS Center for AI Public Policy and Responsibility, designed to build a “large consensus” within industry so that lawmakers have clarity on AI rules, and to bolster AI safety. The effort will seek to develop a federal framework to propel the US ahead of China in AI innovation and increase competition and opportunities for Americans in the AI sector.

Shareholders of Digital World Acquisition Corp. approved a merger with former President Donald Trump’s social media startup Truth Social after the company received approval from the SEC in February. The merger would make Truth Social a public company and could earn Trump $3.5 billion, but it already faces multiple lawsuits from former stakeholders.

The Office of Critical and Emerging Technologies within the Department of Energy issued an RFI soliciting input on various topics to inform a public report, mandated by the AI Executive Order, on AI’s role in enhancing electric grid infrastructure. It seeks information on AI’s potential to improve grid planning, permitting, investment, and operations, ensuring clean, affordable, reliable, resilient, and secure power for all Americans. The comment period ends on April 1.

The White House Office of Management and Budget issued an RFI to inform its development of guidelines for the responsible procurement of AI by federal agencies. The comment period ends on April 29.

Other New Legislation and Policy Updates

Foreign Adversary Communications Transparency Act (H.R. 820, sponsored by Reps. Elise Stefanik (R-NY), Mike Gallagher (R-WI), and Ro Khanna (D-CA)): This bill would require the FCC to publish a list of entities that hold a license granted by the FCC and that have ties to countries specified as foreign adversaries. The bill was reported unanimously by the House Energy and Commerce Communications and Technology Subcommittee and the House Energy and Commerce Committee. No amendments were introduced during mark-up sessions.

Countering CCP Drones Act (H.R. 2864, sponsored by Reps. Elise Stefanik (R-NY) and Mike Gallagher (R-WI)): This bill would require the FCC to add Da-Jiang Innovations (DJI) to the FCC Covered List, prohibiting DJI technologies from operating on US communications infrastructure. The bill was reported unanimously by the House Energy and Commerce Communications and Technology Subcommittee and the House Energy and Commerce Committee. No amendments were introduced during mark-up sessions.

The following bills were introduced in March:

Promoting United States Leadership in Standards Act of 2024 (S. 3849, sponsored by Sens. Mark Warner (D-VA) and Marsha Blackburn (R-TN)): This bill would require NIST to provide Congress with a report on US participation in the development of AI standards and to produce another report assessing a potential pilot program that would award $10 million in grants over four years dedicated to the “hosting of standards meetings in the US.” The bill is designed to strengthen US leadership in international standards-setting for emerging technologies.

Federal AI Governance and Transparency Act (H.R. 7532, sponsored by Reps. James Comer (R-KY), Jamie Raskin (D-MD), Nancy Mace (R-SC), Alexandria Ocasio-Cortez (D-NY), Clay Higgins (R-LA), Gerald Connolly (D-VA), Nicholas Langworthy (R-NY), and Ro Khanna (D-CA)): This bill would codify federal standards for responsible AI use, enhancing oversight and transparency. It would strengthen OMB’s authority to issue government-wide policy guidance and mandate governance charters for high-risk AI systems. Additionally, it would create public accountability mechanisms, including a notification process for those affected by AI-influenced agency determinations.

Verifying Kids’ Online Privacy Act (H.R. 7534, sponsored by Rep. Jake Auchincloss (D-MA)): This bill would amend the Children’s Online Privacy Protection Act of 1998 to enhance protections for children by requiring operators to verify the age of users. Additionally, it would establish a Children Online Safety Fund, into which civil penalties collected by federal entities would be deposited, to distribute grants to local educational agencies for youth online safety programming.

Transformational AI to Modernize the Economy against Extreme Weather Act (S. 3888, sponsored by Sens. Brian Schatz (D-HI), Ben Ray Luján (D-NM), and Laphonza Butler (D-CA)): This bill would require federal agencies, including NOAA and the Departments of Energy and Agriculture, to use AI for enhanced extreme weather prediction and response. The bill targets improved weather forecasting, electrical grid optimization, and efforts to address illegal deforestation, while promoting public-private partnerships to drive weather tech innovation.

Protect Victims of Digital Exploitation and Manipulation Act of 2024 (H.R. 7567, sponsored by Reps. Nancy Mace (R-SC), Anna Luna (R-FL), Matt Gaetz (R-FL), and Bob Good (R-VA)): This bill would prohibit the production or distribution of digital forgeries of intimate visual depictions without consent, except in specified circumstances such as law enforcement or legal proceedings. It also includes clear definitions for key terms, such as digital forgery and consent, and would establish extraterritorial jurisdiction.

Preparing Election Administrators for AI Act (S. 3987, sponsored by Sens. Amy Klobuchar (D-MN) and Susan Collins (R-ME)): This bill would require the Election Assistance Commission (EAC), in consultation with NIST, to create voluntary guidelines for election offices. These guidelines would cover AI use in election administration, cybersecurity, information sharing, and combating election-related disinformation.

No Robot Bosses Act (H.R. 7621, sponsored by Reps. Suzanne Bonamici (D-OR) and Christopher R. Deluzio (D-PA)): This bill would protect job applicants and workers by prohibiting employers from relying solely on automated decision systems for employment decisions, including hiring, disciplinary actions, and firing. It would also require employers to provide pre-deployment testing and training for these systems, ensure human oversight, and disclose system usage, and it would establish a division at the Department of Labor for oversight.

Public Opinion on AI Topics

A HarrisX survey of 1,082 US adults conducted from March 1-4, 2024, asked respondents about their perspectives on various AI regulations. It found that:

- A majority of respondents support regulation requiring labeling of AI-generated content across various formats. Roughly three out of four respondents favor labeling for fully AI-created videos and photos, and 71 percent support labeling for photos and videos containing AI-generated elements.
- 76 percent of respondents also want government regulations to protect jobs.
- Given a list of potential regulations, respondents ranked accountability rules holding developers responsible for AI outputs as their first choice, with 39 percent placing it among their top three choices.

A global survey conducted by MIT Technology Review Insights in November and December 2023 among 300 business leaders found:

- 78 percent of the leaders polled view generative AI as a competitive opportunity rather than a threat, with 65 percent actively considering innovative ways to leverage it.
- Although 76 percent of respondents’ companies had some exposure to generative AI in 2023, only 9 percent adopted the technology widely.
- 77 percent of respondents cite regulatory, compliance, and data privacy concerns as a primary barrier to rapid AI adoption.
- 20 percent of respondents’ companies used generative AI for strategic analysis in 2023, but 54 percent of respondents say they plan to adopt it for this purpose in 2024.

A global survey by Edelman from November 3-22, 2023, with 32,000 respondents across 28 countries found the following:

- 53 percent of respondents say they trust AI companies, down from 61 percent five years ago.
- The US experienced an especially significant decline: 35 percent of US respondents now trust AI companies, compared with 50 percent five years ago.
- 76 percent of respondents trust the technology sector overall, but only 50 percent trust AI/ML innovation specifically.
- 30 percent of those surveyed accept AI innovation, while 35 percent reject it. Some cite a lack of understanding from government regulators (59 percent) as a key reason.
- Trust in AI in the US differs across political lines: 38 percent of Democrats, 25 percent of independents, and 24 percent of Republicans trust it.
- Technology’s lead as the most trusted sector has declined: it is now the most trusted sector in only half of the countries studied, compared with eight years ago, when it led in 90 percent of Edelman’s studied countries.

A survey conducted by IEEE among 9,888 active members in March 2024, including those working on AI and neural networks, found the following:

- About 70 percent of respondents express dissatisfaction with the current regulatory approach to AI.
- 93 percent of respondents support protecting individual data privacy and advocate for regulations to combat AI-generated misinformation.
- 84 percent of respondents favor mandatory risk assessments for medium- and high-risk AI products, with 80 percent advocating for transparency or explainability requirements for AI systems.
- 83 percent of respondents believe the public is insufficiently informed about AI.

Public Opinion on Other Topics

Data for Progress and Accountable Tech surveyed 1,184 US adults from February 16-19, 2024, on regulation of large tech companies, finding:

- 36 percent of people polled express concern about companies hiding their deregulation attempts by using industry lobbying groups to file lawsuits.
- 77 percent of respondents are concerned about Big Tech suing the FTC to continue profiting from data collected from minors, while 76 percent are concerned about lawsuits to overturn state laws protecting minors’ data privacy on social media platforms.
- 55 percent of respondents indicate they would be less likely to use Meta’s platforms if the company sued to overturn federal laws protecting consumer privacy and children’s safety.

Pew Research Center conducted a survey of 1,453 US teens aged 13 to 17 and their parents from September 26 to October 23, 2023, on smartphone usage. The poll found the following:

- 38 percent of teens surveyed feel they spend too much time on their smartphones, with 27 percent expressing the same sentiment about social media. 51 percent of teens surveyed believe the time spent on their phones is appropriate, with 64 percent feeling this way about their social media use.
- 63 percent of teen respondents report not having imposed limits on their smartphone use, while 60 percent have not restricted their social media use.
- While 72 percent of teen respondents sometimes or often feel peaceful without their phone, 44 percent experience anxiety.
- 70 percent of teens surveyed believe smartphones offer more benefits than harms for people their age. 69 percent of teens feel smartphones make it easier to pursue hobbies and interests, but 42 percent believe they hinder the development of good social skills.
- 50 percent of parents report having checked their teen’s phone, and 47 percent impose time limits on phone use.

A survey conducted by the Chamber of Progress among 2,205 adults between January 30-February 2, 2024, revealed the following findings:

- 44 percent of respondents believe online platforms should be required to verify a user’s age using personal data, while 56 percent prefer using publicly available or user-provided information that is not stored.
- 45 percent of people surveyed trust online platforms to keep their data safe, while 55 percent do not trust them much or at all.
- If required to provide personal information to log in, 60 percent of people polled would limit or modify their internet usage, while 19 percent would not and 20 percent have no opinion.
- 87 percent of respondents are very or somewhat concerned about their personal data being shared online, while 10 percent are not concerned much or at all.
- 85 percent of people surveyed are very or somewhat concerned about children’s personal data being shared online, while 9 percent are not concerned much or at all.

We welcome feedback on how this roundup could be most helpful in your work – please contact Alex Hart with your thoughts.