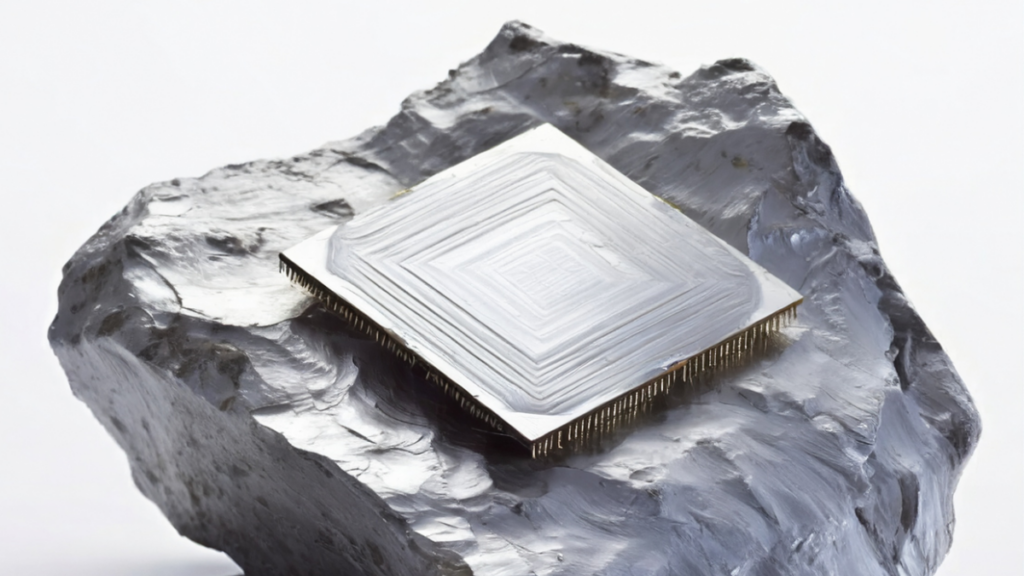

Catherine Breslin & Team and Adobe Firefly / Better Images of AI / Chipping Silicon / CC-BY 4.0

Governments often talk about restricting access to silicon chips, and some, such as the United States, have even enacted laws to do so. Yet few people outside the circle of industry experts discuss how chips actually work. In policy circles, chips are treated as black boxes beyond human understanding.

The workings of a chip are indeed microscopic and invisible to the naked eye. But they also rely on understandable technological choices that have been accumulated over many years in the computer industry. Digging deeper into this subject opens up unexpected opportunities, hitherto underexplored, to democratically shape the industry in the context of AI.

More and more

The fundamental driving force of the IT industry has been the pursuit of more and more computing power by packing more and more transistors onto a chip. Moore’s law is not as prominent as it once was, but its underlying spirit remains with us today.

If we could change that metric – if, as I argue, we could put a limit on it – we might see dramatic positive effects not only on energy consumption but also in other areas, such as lower costs and markets that are more open to new entrants.

Freed from the dogma of packing transistors into smaller and smaller areas, the chip industry could also pursue interesting paradigms of innovation that have previously been pushed aside.

It is unlikely that industry incumbents will move in this direction on their own. But I still believe this idea is not utopian. I am optimistic because the science and technology policy community is gradually realizing that AI can be regulated: it is not an unprecedented phenomenon but something more familiar, and therefore governable.

One piece of evidence supporting this view is the discussion of IT’s excessive energy and resource requirements – evidence of the desire to talk about AI in material terms.

The second piece of evidence comes from a careful reading of some of the new AI regulations.

For example, the European Union’s recent AI Act requires developers to disclose the “estimated energy consumption” of their AI models, and requires the European Commission to “report on the energy-efficient development of general-purpose AI models” and to “assess the need for further measures or actions, including binding measures or actions.”

While we are still a long way from legally enforceable oversight with data reporting requirements, this is the start of a process of change that could unfold over the next decade.

Technology that matters to governments

Silicon chips are a key government technology, both useful devices and symbols of national prestige. Like nuclear reactors, jet engines, and space rockets, but unlike many other important technologies, these ingenious devices would never have been invented or become widespread without the will of government elites.

Semiconductors were born out of the US military-industrial complex in the last century, but more recently have been adopted by state-owned enterprises or East Asian companies with historically close ties to the state.

Manufacturing is concentrated in a few large companies that are politically immobile and almost synonymous with the countries in which they produce. By the same token, the structure of the sector is highly legible.

Rube Goldberg Machine

Materialities such as power and energy have always been top of mind in this specialized industry, and over time, leading companies have developed chips that are more energy efficient at performing calculations.

According to Koomey’s Law, the number of computations that can be performed per kWh has grown exponentially since the advent of electronic computers, doubling on average every 1.57 years between 1946 and 2009. The doubling time slowed to 2.29 years between 2008 and 2023 (Prieto et al., 2024).
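To make the two doubling times easier to compare, they can be converted into yearly growth rates. The sketch below is only an illustration of the arithmetic: the function names are hypothetical, and it assumes smooth exponential growth at the quoted doubling times, which is a simplification of the historical record.

```python
# Illustrative arithmetic only: converts a doubling time into growth figures,
# assuming perfectly smooth exponential growth (a simplification).

HISTORIC_DOUBLING_YEARS = 1.57  # 1946-2009, figure quoted in the text
RECENT_DOUBLING_YEARS = 2.29    # 2008-2023, figure quoted in the text


def annual_growth_factor(doubling_time_years: float) -> float:
    """Yearly multiplier in computations per kWh implied by a doubling time."""
    return 2.0 ** (1.0 / doubling_time_years)


def total_gain(doubling_time_years: float, span_years: float) -> float:
    """Overall multiplier accumulated over span_years at that doubling time."""
    return 2.0 ** (span_years / doubling_time_years)


if __name__ == "__main__":
    for label, doubling in (
        ("1946-2009", HISTORIC_DOUBLING_YEARS),
        ("2008-2023", RECENT_DOUBLING_YEARS),
    ):
        yearly_percent = (annual_growth_factor(doubling) - 1.0) * 100.0
        decade_gain = total_gain(doubling, 10.0)
        print(f"{label}: about {yearly_percent:.1f}% per year, "
              f"x{decade_gain:.0f} per decade")
```

Under these assumptions the slowdown is from roughly 55% to roughly 35% improvement per year, which compounds into a large difference over a decade.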

This efficiency increase, however, did not come from any focus on energy. It came from an overwhelming obsession with packing transistors ever more densely, which incidentally also increased efficiency.

A deliberate effort to reduce energy use, by contrast, might have yielded different and interesting results (the archives of the seminal Hot Chips symposium show some paths that were not taken, such as slow computing).

Packing transistors into ever-smaller spaces increased the amount of heat generated at the junctions, and much of the industry was dedicated to solving this problem, likely at considerable but unknown opportunity cost.

As electrons move through a powered chip, they set off lattice vibrations that generate heat. And this heat is significant: thermodynamically, nearly all of the power delivered becomes heat.

In densely packed integrated circuits, heat cannot spread out. The denser the chip, the more severe the problem becomes. Even where the ceramic components themselves are little affected, the surrounding materials can melt, reducing reliability and causing system failure.

As the number of components in a circuit increases, the chances of an individual component failing also begin to increase, purely as a numbers game.
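The "numbers game" can be made concrete with a standard probability identity. The sketch below assumes, purely for illustration, that every component fails independently and with the same probability; real chips violate both assumptions, and the example probability is hypothetical rather than a measured failure rate.

```python
# Illustrative only: assumes independent, identical component failure
# probabilities, which real circuits do not strictly satisfy.


def prob_any_failure(n_components: int, p_fail: float) -> float:
    """Probability that at least one of n_components fails.

    Uses 1 - (1 - p)^n, where p_fail is the (hypothetical) probability
    that any single component fails over the period of interest.
    """
    return 1.0 - (1.0 - p_fail) ** n_components


if __name__ == "__main__":
    p = 1e-9  # hypothetical per-component failure probability
    for n in (10**3, 10**6, 10**9):
        print(f"{n:>13,} components: {prob_any_failure(n, p):.6f}")
```

Even with a tiny per-component probability, the chance that something fails climbs steeply once the component count approaches the reciprocal of that probability.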

The growing problem was recognized in the late 1970s and has only worsened in the years since, especially after the relationship between transistor size and power density (Dennard scaling) broke down.
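The breakdown of this scaling relationship can be sketched with the textbook approximation that dynamic power is proportional to capacitance times voltage squared times frequency. The code below is a simplified illustration, not a device model: the function name is hypothetical, all quantities are normalized to 1 before shrinking, and the "voltage does not scale" case assumes frequency still rises, which is itself an idealization.

```python
# Simplified illustration of Dennard scaling using dynamic power ~ C * V^2 * f.
# All quantities are normalized to 1.0 before the shrink; leakage is ignored.


def power_density_ratio(k: float, voltage_scales: bool = True) -> float:
    """Return (new power density) / (old power density) after shrinking by k.

    k > 1 is the linear shrink factor. Under classic Dennard scaling,
    capacitance and voltage fall by 1/k while frequency rises by k.
    If voltage_scales is False, voltage stays fixed (an assumed scenario).
    """
    capacitance = 1.0 / k
    voltage = 1.0 / k if voltage_scales else 1.0
    frequency = k
    power_per_transistor = capacitance * voltage ** 2 * frequency
    area_per_transistor = 1.0 / k ** 2
    return power_per_transistor / area_per_transistor


if __name__ == "__main__":
    for k in (1.4, 2.0, 4.0):
        ideal = power_density_ratio(k, voltage_scales=True)
        stalled = power_density_ratio(k, voltage_scales=False)
        print(f"shrink x{k}: ideal {ideal:.2f}, fixed voltage {stalled:.2f}")
```

In the idealized case the ratio stays at 1, so smaller transistors do not run hotter per unit area. Once voltage stops falling, the same shrink raises power density by roughly the square of the shrink factor in this simplified model.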

The authors of a popular textbook on thermal management of chips noted in 2006 that “it won’t be long before the power density of microprocessors rivals that of nuclear reactors or rocket nozzles.”

The answer to the thermal problem was a Rube Goldberg solution that combined fans, copper heat pipes, and thermally conductive interface materials such as thermal paste (or more recently, liquid coolants).

The term Rube Goldberg is carefully chosen: a concise article by Professor DDL Chung, one of the leading scientists in the field of thermal paste, highlights the difficulty of scientifically evaluating such interface materials.

When Professor Kunle Olukotun invented the multi-core chip, he got around the heat problem from a different direction, by increasing the number of cores per die and unpacking the transistors to some extent.

Graphics processing units (GPUs) have the advantage of being much less dense and less heat-intensive than CPUs. Bitcoin mining and then AI have given rise to new uses for GPUs beyond their original niche use in gaming.

Political Economy

It’s fair to say that the pursuit of computing power has long since gotten out of control. Chips work by passing electricity through tiny ceramics, heating them to high temperatures in the process, which has created many new problems and called for stopgap measures rather than permanent solutions.

In response, some policymakers are arguing for a radical overhaul of the entire energy infrastructure to keep up with the new energy demands of both powering chips and running the cooling systems that support them.

This means huge centralized data centers like the old mainframes, rather than decentralized computing devices which in theory would be more democratic.

It is time to recognize the causes of this phenomenon and set limits.

My suggestion is that we need to think about computational sufficiency – neither more nor less than necessary – in light of an assessment of our computational needs and available resources.

This means reconnecting the basic currency of computation, the silicon/silicon dioxide bandgap (Carver Mead’s term), to the problems we want or need to solve.

In the early days of electronic computing, the connection was obvious: computers, right down to the last valve, were tied to specific problems, like wartime code-breaking.

But the conceptual connection between the physical material and its use has since receded into the background, in complex ways that are very difficult to trace.

This is as much a question of political economy as it is of technology, or a combination of both. The possibilities cannot be fully defined in advance, but they will become clear, even if imperfectly, in the course of regulatory efforts.