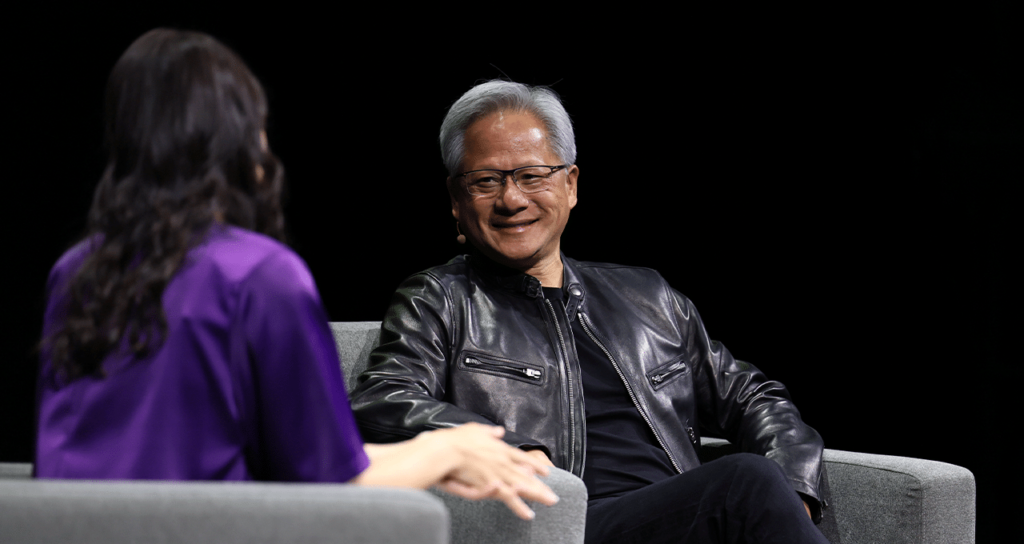

NVIDIA founder and CEO Jensen Huang said on Monday that the generative AI revolution, which has deep roots in visual computing, is amplifying human creativity, while accelerated computing promises huge gains in energy efficiency.

That makes the SIGGRAPH professional graphics conference, taking place in Denver this week, the perfect forum to discuss what lies ahead.

“Everyone will have an AI assistant,” Huang said. “Every company, every job will have an AI assistant.”

But while generative AI is expected to increase human productivity, the accelerated computing technologies that underpin it are expected to make computing more energy efficient, Huang said.

“Accelerated computing helps us save huge amounts of energy — 20 times, 50 times more — to do the same work,” Huang said. “The first thing we have to do as a society is to make all applications as fast as we can, which will reduce the amount of energy consumed around the world.”

Huang's remarks follow a series of announcements from NVIDIA today.

NVIDIA unveiled a new suite of NIM microservices tailored to diverse workflows, including OpenUSD, 3D modeling, physics, materials, robotics, industrial digital twins and physical AI.

These advancements are aimed at empowering developers, especially with the integration of Hugging Face Inference-as-a-Service on DGX Cloud.

Additionally, Shutterstock launched its Generative 3D service and Getty Images upgraded its services with NVIDIA Edify technology.

In the AI and graphics space, NVIDIA announced new OpenUSD NIM microservices and reference workflows designed for generative physical AI applications.

This includes programs to accelerate the development of humanoid robots, including through new NIM microservices for robot simulation.

Finally, WPP, the world’s largest advertising agency, is using Omniverse-powered generative AI for The Coca-Cola Company to increase brand authenticity, showcasing the practical application of NVIDIA’s AI technology advancements across industries.

Huang and Good began their conversation by exploring how visual computing has given rise to everything from computer games to digital animation, GPU-accelerated computing, and more recently, the generative AI running in industrial-scale AI factories.

All these advancements build on each other: robotics, for example, requires advanced AI and photorealistic virtual worlds in which that AI can be trained before it is deployed in the next generation of humanoid robots.

Huang explained that robotics requires three computers: one to train the AI, one to test it in a physically accurate simulation, and one that lives inside the robot itself.

“Almost every industry will be affected by this, from scientific computing to improve weather forecasting using less energy, to greater collaboration with creators for image generation, to virtual scene generation for industrial visualization,” Huang said. “Robots and self-driving cars will all be transformed by generative AI.”

Similarly, the NVIDIA Omniverse system, built on the OpenUSD standard, is key to leveraging generative AI to create assets that can be used by the world’s biggest brands.

By drawing on the brand assets stored in Omniverse, these systems can capture and replicate carefully curated brand magic.

Ultimately, all of these systems – visual computing, simulation, large language models – will come together to create digital humans that can help us interact with all kinds of digital systems.

“One of the things we’re announcing here this week is the concept of digital agents, digital AI that powers every job in our company,” Huang said.

“And one of the most important use cases that people are discovering is customer service,” Huang said. “I think in the future, it’s going to be humans in charge, but with AI involved.”

All of this, like any new tool, promises to increase human productivity and creativity. “Imagine the stories we could tell with these tools,” Huang said.